Bugfixes/Improvements

- [UI] Fixed a problem with WindowListProvider: when a window title was updated, EA lost track of the window for 1 frame

- [Scripting] Memory API - Added new methods `GetThreads`, `VirtualQuery` and `GetMemoryRegions`

- [Scripting] Fixed a problem with `IComputerVisionExperimentalScriptingApi` not being available in the SDK

- [Scripting] Reworked the script loading process, which should fix "Could not build the proper workspace state, please report this error"

- [Scripting] NuGet - improved the package resolution mechanism and added more granular TFM (target framework moniker) filtering
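As a rough illustration of what TFM filtering means, here is a minimal Python sketch that picks the best-matching `lib/` folder of a package for a .NET 5+ target. It is a simplified model under stated assumptions, not EyeAuras' resolver and not NuGet's full rule set: `parse_tfm` and `pick_best_tfm` are hypothetical names, and .NET Framework, `netcoreapp*` and platform-specific TFMs are deliberately not modeled.

```python
def parse_tfm(tfm):
    """'net8.0' -> ('net', (8, 0)); 'netstandard2.0' -> ('netstandard', (2, 0)).

    Returns None for TFMs this sketch does not model, e.g. .NET Framework
    ('net48'), 'netcoreapp3.1' or platform TFMs like 'net8.0-windows'.
    """
    for family in ("netstandard", "net"):
        if tfm.startswith(family):
            rest = tfm[len(family):]
            if "." not in rest:  # 'net48', 'net45': .NET Framework, not modeled
                return None
            try:
                return family, tuple(int(part) for part in rest.split("."))
            except ValueError:
                return None
    return None


def pick_best_tfm(target, available):
    """Pick the closest compatible TFM from `available` for a .NET 5+ `target`."""
    parsed = parse_tfm(target)
    if parsed is None or parsed[0] != "net" or parsed[1] < (5, 0):
        raise ValueError(f"sketch only handles .NET 5+ targets, got {target!r}")
    target_version = parsed[1]

    candidates = [(tfm, parse_tfm(tfm)) for tfm in available]
    candidates = [(tfm, p) for tfm, p in candidates if p is not None]

    # Prefer the newest netX.Y that is not newer than the target itself.
    same_family = [(tfm, p[1]) for tfm, p in candidates
                   if p[0] == "net" and p[1] <= target_version]
    if same_family:
        return max(same_family, key=lambda c: c[1])[0]

    # Fall back to netstandard: .NET 5+ implements netstandard up to 2.1.
    standard = [(tfm, p[1]) for tfm, p in candidates
                if p[0] == "netstandard" and p[1] <= (2, 1)]
    if standard:
        return max(standard, key=lambda c: c[1])[0]
    return None
```

For example, a package shipping `netstandard2.0`, `net6.0` and `net9.0` would resolve to `net6.0` for a `net8.0` script, since `net9.0` is newer than the target and a same-family match is preferred over netstandard.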

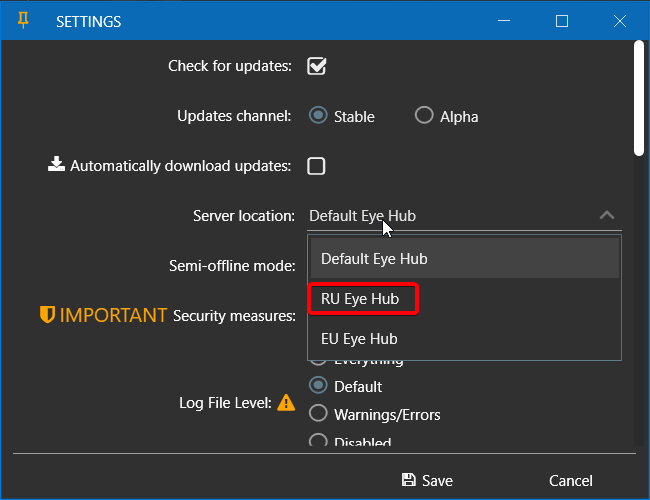

New RU hub

Added a new RU hub, which should help circumvent accessibility issues in the RU region. Please report if login still does not work.

Bugfixes/Improvements

- [Freeze] Fixed the BT editor freezing under some circumstances when switching between auras/BTs

- [UI] Fixed aura limit sometimes still being applied

- [UI] Semi-offline mode is now enabled by default. Plans for a full offline mode are postponed for now due to security concerns, but are not off the table yet

- [ImGui] A lot of changes in the last 2 months; I would recommend checking out the latest version of `EyeAuras.ImGuiSdk`, `0.1.42`: markdown editor, animations, composite buttons, fonts integration, images, caching, etc.

- [Scripting] Blazor API improvements - added `RegisterViewType(Type viewType, Type dataContextType, object key = default)`, which allows you to register your own UI widgets

- [Scripting] Memory API - a huge number of changes in performance and underlying mechanisms. At this point you can consider the Memory API stable and performant enough for literally any kind of development you might want. Also added multiple injection mechanisms (CreateRemoteThread, APC and several others). Note that neither injection nor memory reading will work on anticheat-protected games unless you bypass the AC first or use a MemoryAPI that bypasses AC checks (like DMA).

- [Scripting] Fixed a problem with `ScriptContainerExtensions` being re-registered by BT nodes. This led to singletons not really being... singletons.

- [Scripting] NuGet - added `DefaultNuGetPackageBlacklist`, which improves compatibility with old netstandard1.3 and netstandard2 packages
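Why re-registration breaks singletons can be shown with a tiny Python model of a service container. The class names below are hypothetical and this is not EyeAuras' `ScriptContainerExtensions` code; it only contrasts a registration that overwrites an existing singleton with an idempotent one that keeps the first instance.

```python
class NaiveContainer:
    """Each registration overwrites the entry, discarding any existing instance."""

    def __init__(self):
        self._singletons = {}

    def register_singleton(self, key, factory):
        # A second call (e.g. from another BT node) silently creates a new instance.
        self._singletons[key] = factory()

    def resolve(self, key):
        return self._singletons[key]


class IdempotentContainer(NaiveContainer):
    """Registering an already-known key is a no-op, so the first instance survives."""

    def register_singleton(self, key, factory):
        if key not in self._singletons:
            self._singletons[key] = factory()


def demo(container_cls):
    """Register, resolve, re-register, and check whether the instance is unchanged."""
    container = container_cls()
    container.register_singleton("cache", dict)
    first = container.resolve("cache")
    container.register_singleton("cache", dict)  # simulated re-registration
    return container.resolve("cache") is first


print(demo(NaiveContainer))       # False: two different "singletons"
print(demo(IdempotentContainer))  # True: one shared instance
```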

New release - 1.9 RU/EN

Large-scale changes to scripting and the available APIs, a switch to a new monetization model, and much more.

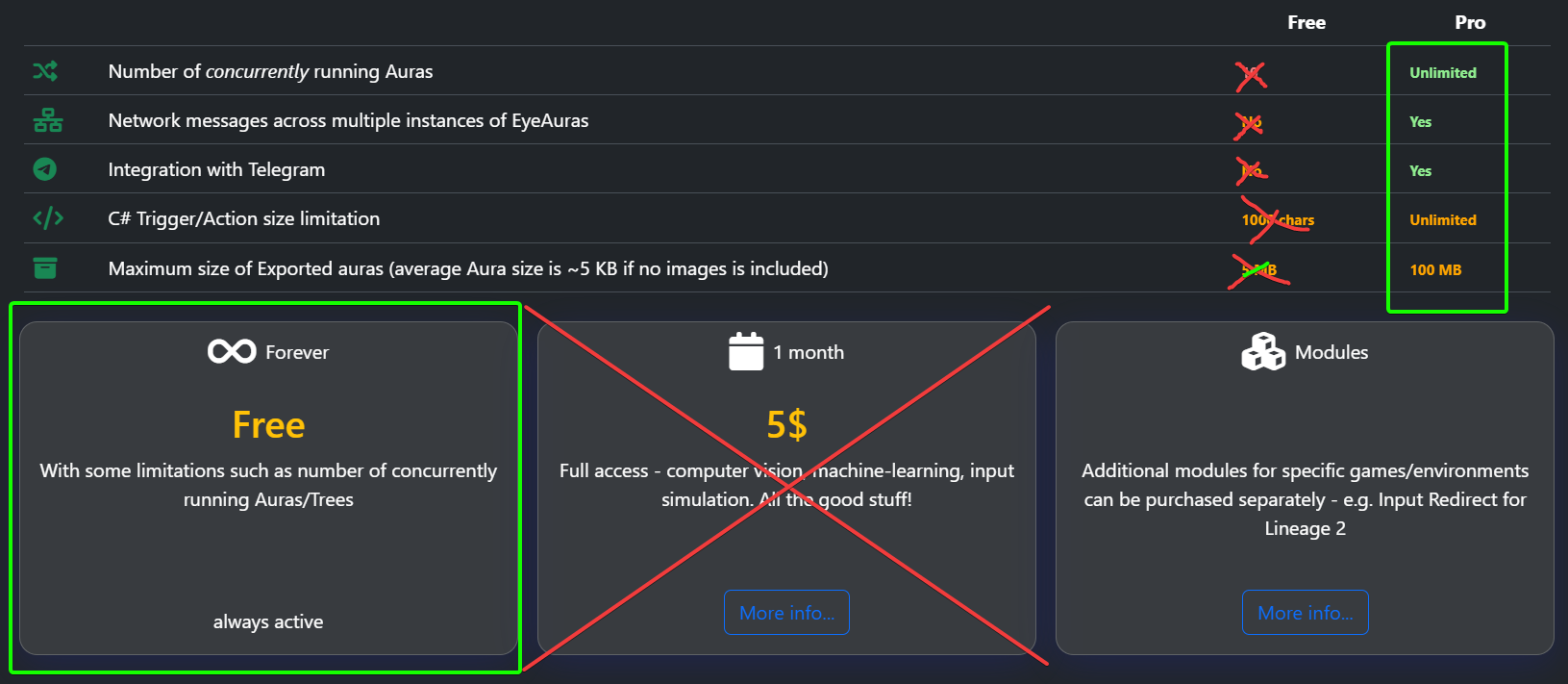

Monetization changes - Free becomes Pro

All restrictions that existed in the Free version are lifted: no more limits on the number of auras, script size, Telegram integration, etc.

p.s. For now, a license is still required to send network messages, but this restriction will also be removed soon, as soon as I sort out the technical details, most likely by the next release

Is a license no longer needed?

Yes. All functionality that was previously gated behind a license is now available to everyone. In the very near future, the license purchase options will be removed from the website.

Is a connection to the program's server required?

For now, yes. This is tied to how the program protects its modules and is currently an integral part of the system. In the coming year I will try to revisit this approach, but it will take time. At a minimum, there needs to be a mechanism that allows using the program offline for at least a few months.

Why?

With the development of the sublicense and mini-application mechanism (https://eyeauras.net/share/S20251105201607zPUUkZryl4CY), I think it is right to shift the focus toward monetizing finished products built on top of the program, while making the program itself free. Everything accumulated over 6 years of development is now available to everyone, and I hope this will encourage authors who previously hesitated over whether it was "worth trying to build something".

Further development will focus on making it easier to add modules to the program, for example specific input simulators useful for a particular game, or ready-made mini-applications that solve practical tasks. All of this can already be done now, but information on the topic is scarce and scattered. This will be fixed.

But I only recently bought a license, can I get a refund?

Message me privately and I will try to help with this inconvenience.

What to expect in 2026

- an improved user experience when releasing mini-applications and other scripts/aura packs

- Unreal-like Blueprints - this idea has been in the air for a long time, and I will try it out on one of the bots we are currently developing. The point is to merge the Auras and Behavior Trees mechanisms into a single whole, which will make it possible to solve diverse tasks with one tool (blueprints)

- Sublicenses - this mechanism already works, but at the moment many of its parts are manual and need to be automated

- More documentation - one of the program's main problems right now is that there is too much in it; this functionality accumulated over the years and is not always documented in detail. Nowadays, when many questions are answered via LLMs (ChatGPT, Gemini, etc.), the quality and quantity of API documentation is a decisive factor. I plan to dedicate several weeks exclusively to this, and possibly bring in a specialist

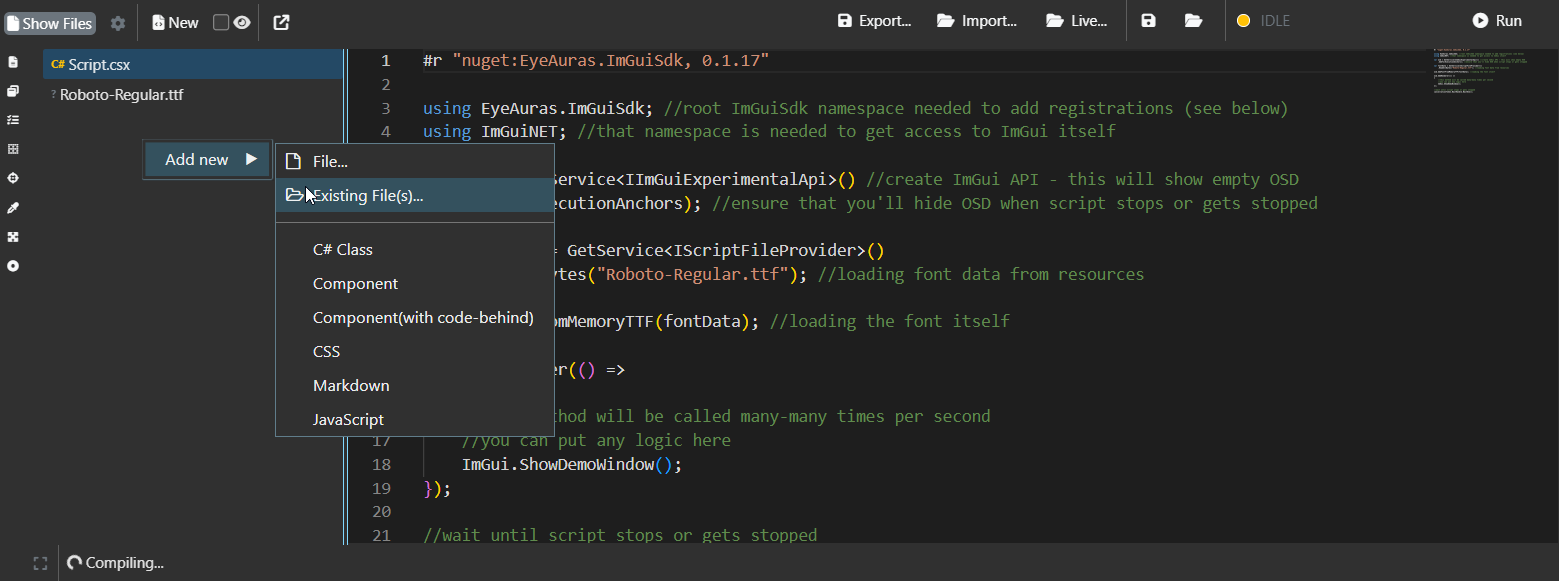

C# Scripting - Embedded Resources

A very powerful mechanism has been added to scripts: you can now embed entire files directly into your script, be it images, video, text or even DLL/EXE. You can then work with them directly from the script: display them in the UI, load them into Triggers/Actions, or simply unpack them to disk for further use. This is especially useful if you want to embed a library that is not available on NuGet: it is now enough to attach the file to the script and tell the program to use it as a dependency.

Moreover, if you use C# Script protection, these resources will also be encrypted as part of the script, protecting your copyright and work.

C# Scripting - Variable improvements

Improvements to the script variables system: the approach to handling exceptional situations has changed slightly, with the goal of making scripting simpler for new users.

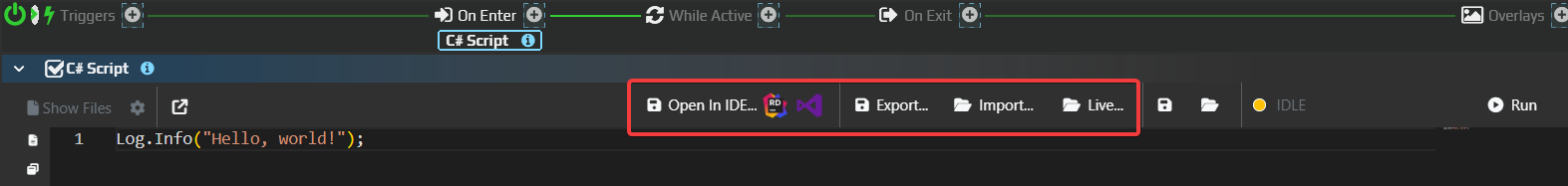

C# Scripting - IDE integration

Since last year, the program has had an IDE integration mode; in practice, this means you can write full-fledged programs while staying within the EyeAuras infrastructure.

You can edit a script directly from JetBrains Rider / Visual Studio or any other development environment, including AI-based ones such as Google's Antigravity. This simplifies and speeds up writing even small scripts, not to mention large projects.

Performance improvements

This version contains more than a dozen improvements and optimizations aimed at speeding up load times and the program's internal mechanisms.

Testing on an ImGui bot that uses memory reading shows it can now operate in the 2k FPS range, and that INCLUDES the cost of reading memory and parsing all the required game structures. Before the fixes, the numbers were around 500 FPS.

Please report any problems!

Bugfixes/Improvements

- [Crash] Fixed a problem with the License window crashing on startup

- [EyePad] "Recents" are now sorted by date (descending)

- [Scripting] Added a new "Open in IDE" button, which allows you to edit the script in Rider/Visual Studio and see the changes in EyeAuras in real time - [more details here...](/scripting/ide-integration)

- [Scripting] Embedded Resources fixes - made Script FileProvider more flexible (it now understands more path formats)

- [Scripting] Fixed a bug in Live Import - it should now correctly detect changes in `.csproj`

- [Scripting] Improved script resource deallocation - even if a user script still contains "dangling" references, EyeAuras will try to clean them up once the script is no longer running

- [BehaviorTree] Fixed a problem with circular references that caused the UI to show connections that do not actually exist

- [BehaviorTree] Added a new node - SetVariable. It is still at a very early stage, but I decided to release it early just to test it out

- [BehaviorTree] Improved BT performance

- [SendInput] Fixed a problem with the TetherScript driver: at some resolutions/DPI settings, mouse clicks also shifted the cursor slightly (+-1px). One more thing: please make sure you are using a compatible driver version, HVDK 2.1, so that everything works as expected; more details on this page
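The kind of path flexibility described in the Embedded Resources fix can be sketched as a normalization step that maps different spellings of the same path to one lookup key. This Python sketch is hypothetical (`normalize_resource_path` and `ResourceStore` are not EyeAuras APIs), and both the case-insensitive lookup and the rejection of paths escaping the resource root are assumptions.

```python
import posixpath


def normalize_resource_path(path: str) -> str:
    """Map equivalent spellings of an embedded-resource path to a single key."""
    p = path.strip().replace("\\", "/")  # accept Windows-style separators
    p = p.lstrip("/")                    # treat "/a/b" like "a/b"
    p = posixpath.normpath(p)            # collapse "./", "//" and "x/../"
    if p in (".", "..") or p.startswith("../"):
        raise ValueError(f"not a path inside the resource root: {path!r}")
    return p.lower()                     # assumption: case-insensitive lookup


class ResourceStore:
    """Hypothetical store of embedded files, keyed by normalized path."""

    def __init__(self, files: dict[str, bytes]):
        self._files = {normalize_resource_path(k): v for k, v in files.items()}

    def read(self, path: str) -> bytes:
        return self._files[normalize_resource_path(path)]
```

With this approach, `./images\icon.PNG` and `/Images//tmp/../Icon.png` both resolve to the same entry as `Images/Icon.png`.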

Bugfixes/Improvements

- [Scripting] Improved NuGet package resolution - should solve the problem of baseline assemblies conflicting with packaged ones

- [Scripting] MemoryAPI - added `NativeLocalProcess`, very similar to `LocalProcess`, but it uses a slightly lower-level reading technique and allows using a handle to the process as a starting point

- [Scripting] MemoryAPI - added DLL injection via manual mapping, which allows avoiding some detection vectors

Bugfixes/Improvements

ALPHA! Performance improvements

This version contains more than a dozen different improvements and performance optimizations which I have been preparing for quite some time. For the next couple of weeks there may be instabilities, but for most use cases, especially for scripting in EyePad, this should be a night-and-day difference.

I tested the improvements on an ImGui-based bot: it is now possible to push into the 2k FPS range. That is WITH memory reading and parsing all needed game structures! So, basically, the bot loop runs at ~2k ticks per second; before these fixes, FPS was around 400-500.
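For a sense of scale, here are those frame rates converted into per-tick time budgets (plain arithmetic on the figures above, not a separate measurement):

$$
t_{\text{tick}} = \frac{1}{f}: \qquad \frac{1}{2000\,\text{s}^{-1}} = 0.5\,\text{ms}, \qquad \frac{1}{500\,\text{s}^{-1}} = 2\,\text{ms}, \qquad \frac{1}{400\,\text{s}^{-1}} = 2.5\,\text{ms}
$$

That is roughly a 4x to 5x reduction in the time spent per bot-loop iteration.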

The next goal is to stabilize the FPS and make it smoother; right now it is quite "spiky". A couple of months ago, in the changelog for 1.7.8559, I already wrote about adopting a new prototype GC, which should help with exactly that goal.

Please report anything you notice!

Bugfixes/Improvements

- [Scripting] Improved script deallocation - even if a user script contains some dangling references, EyeAuras will try to clean them up once the script is no longer running

- [BehaviorTree] Improved performance in BTs

Bugfixes/Improvements

- [Scripting] Embedded Resources fixes - made Script FileProvider more flexible (it now understands more path formats)

- [Scripting] Fixed a bug in Live Import - it should now properly detect changes in `.csproj`

- [EyePad] Recents are now ordered by date (desc)

- [BehaviorTree] Added a new node - SetVariable. It is still at a very early stage, but I decided to release it early just to test it out
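For readers new to behavior trees, here is a hypothetical Python sketch of what a SetVariable-style leaf node typically does: write a value into a shared blackboard and report success, so later nodes can read it. The `Status`, `Condition`, `Sequence` and `SetVariable` classes are illustrative assumptions, not the EyeAuras node implementation.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class SetVariable:
    """Leaf node: stores a value in the blackboard under `name`, then succeeds."""

    def __init__(self, name, value):
        self.name = name
        self.value = value

    def tick(self, blackboard):
        blackboard[self.name] = self.value
        return Status.SUCCESS


class Condition:
    """Leaf node: succeeds when the predicate holds for the blackboard."""

    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, blackboard):
        return Status.SUCCESS if self.predicate(blackboard) else Status.FAILURE


class Sequence:
    """Composite node: ticks children in order, stopping at the first non-success."""

    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            status = child.tick(blackboard)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


# Example: flag that healing is needed only when hp is low.
tree = Sequence(Condition(lambda b: b["hp"] < 50), SetVariable("should_heal", True))
```

Ticking `tree` with `{"hp": 30}` sets `should_heal` and succeeds; with `{"hp": 80}` the condition fails first, so the variable is never written.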

Wiki

C# Scripting - IDE Integration

- IDE Integration - details about integration with IDEs (Rider/Visual Studio) via LiveImport

Bugfixes/Improvements

- [Crash] Fixed a problem with License window crashing on startup

- [Scripting] Added a new "Open in IDE" button, which allows you to edit the script in Rider/Visual Studio and see the changes reflected in EyeAuras in real time - more here...